‘Fire-and-forget’; ‘armchair warfare’; ‘cyber warriors’. The lingo that permeates military rhetoric nowadays reveals the direction warfare has taken in recent times and continues to pursue. Attempts at introducing autonomous innovations into the military are by no means recent. Robotics, however, is beginning to shed its label as the outlandish musing of science-fiction authors, and is considered by many to be the future of warfare.

The ideal in warfare has historically been to carry out objectives while limiting one’s own casualties as much as possible: the advent of widespread and significant technological advancements in the latter half of the 20th century and into the 21st has enabled this on a large scale. Though highly controversial, drone warfare stands out as a prime example: the use of Unmanned Aerial Vehicles (UAVs) enables warfare to be carried out remotely while having only to fear the possible loss of admittedly expensive equipment.

Autonomous weapons have benefits beyond limiting human casualties. Relying on technology theoretically lessens the part human beings play in carrying out orders. For many, reducing human agency in favour of robotic semi-autonomy is the ideal towards which militaries need to strive, for reasons of speed, accuracy and error reduction.

Take for example the Phalanx, often colloquially referred to as the ‘goalkeeper.’ An autonomous weapon system devised as a defence against anti-ship missiles and – along with equivalents – used by navies throughout the world, it epitomises man’s attempt at supplementing warfare with robotics. It is much faster than any gun operator could ever be, far more accurate, and it leaves human beings with little more than a supervisory role in the target-engaging process. However, automating warfare and supplementing human decision-making with robotics raises the worrying possibility of distancing operators too much from the actions they are enabling, thus desensitising them to the gravity of potential errors. Furthermore, a host of malfunctions lends legitimacy to sceptics’ arguments that errors simply cannot be eradicated. The Phalanx alone was responsible for four accidents in the 1980s and 1990s.

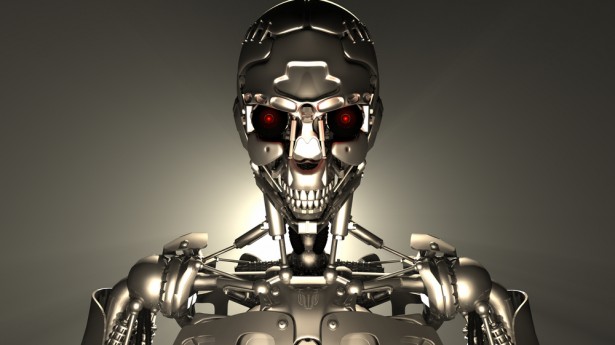

To further complicate the issue, rapid and significant improvements in all things technological raise the ethically tenuous question of removing human agency from the decision-making process altogether. In basic terms, this would mean creating and using artificial intelligence in the form of fully autonomous weapon systems.

This is a source of considerable controversy. It is one thing to design and use weapons capable of facilitating a given task; it is an entirely different matter to conceive of weapon systems capable of selecting and firing upon targets of their own choosing, without any human intervention. Though the idea of replacing human approximation with precise arithmetic decision-making may appeal to many within military institutions in a position to afford it, such an admittedly not-too-distant prospect has provoked much debate. The first major conference on the subject took place on May 13, 2014: the multinational convention on ‘lethal autonomous weapons systems’, under the framework of the UN’s Convention on Certain Conventional Weapons.

That such a conference took place is in itself very telling of the extent to which autonomous weapons are deemed not only possible, but achievable in the near future. Just as important in starting the debate, however, are incidents such as the one that took place on October 18, 2007, in South Africa. During a shooting exercise at Lohatlha, a software glitch caused a robotic anti-aircraft cannon to begin spraying bullets all around, seemingly of its own accord, killing nine and injuring fourteen. Even more terrifying is the fact that this particular gun, an Oerlikon GDF-005, has the ability to reload on its own; it was only after using up all available magazines that it stopped firing.

In such instances, who exactly is to be held accountable? Decommissioning the offending weapon after the fact is all very well, but who, between the manufacturer and the owner, is responsible for the accident?

A crucial element of the debate concerns human rights law, international humanitarian law and rules of engagement. As impressive a feat as designing and constructing weapons capable of acting of their own accord may be, programming them to faultlessly incorporate such things as the Geneva Conventions into decision-making is unfeasible. Take the Martens Clause as an example: it has a degree of vagueness purposefully built in so as to allow for interpretation, and the sort of linear decision-making that a robot entails would make it very difficult – if not impossible – to accommodate the clause. Furthermore, questions hang over whether a robot would be capable of evaluating the proportionality of retaliation, or even of attack in general.

In theory, a robot could be programmed to make the correct decisions in accordance with the laws of war more often than it would violate them, and certainly more often than some might feel comfortable counting on humans to uphold them. However, soldiers engage in combat with the benefit of the doubt afforded to them: they are fully expected to uphold international conventions, and failure to do so will likely result in reprisals. A robot has no such sense of accountability, and therefore justice cannot be used as a deterrent.

The suspicion with which the matter is widely viewed comes down to a multitude of factors: issues of proportionality, law, ethics, accountability and the distinction between combatants and civilians all play their part in making autonomous weapons the subject of heated debate.

Ultimately, however, it comes down to one simple question: are we comfortable entrusting robots with the power to decide who lives and who dies on the battlefield?